Data science affects our lives, from trivial restaurant recommendations to life-changing loan risk predictions. Data science has the ability to improve lives, but like any tool, when misused or misunderstood, it can cause damage.

The Dark Decade of Data Science

During the 2010s, some of the greatest minds in data science were working at Facebook. Increasing user engagement was the success metric, as it led to more eyeballs on ads. Facebook increased user engagement by surfacing content that kept users on the platform. An artifact of Facebook’s approach was that users were not exposed to opposing ideas. This simple concept had horrific outcomes. In 2016 the Rohingya genocide began, leading to the deaths of tens of thousands of people in Myanmar. Facebook was largely responsible for spreading and inciting hatred towards the Rohingya people. Marzuki Darusman, the chairman of the U.N. Independent International Fact-Finding Mission on Myanmar, stated that Facebook played a “determining role” in Myanmar¹.

“It has… substantively contributed to the level of acrimony and dissension and conflict, if you will, within the public. Hate speech is certainly of course a part of that. As far as the Myanmar situation is concerned, social media is Facebook, and Facebook is social media,” – Marzuki Darusman.

Facebook has changed some policies but hasn’t addressed the root cause. Facebook continues to make short-term optimizations with little understanding of their long-term impacts. Consider notification fatigue:

Again, this is the result of a short-term, data-driven decision. More notifications equal more engagement, no matter the relevance. This gambit works until users reach a saturation point.

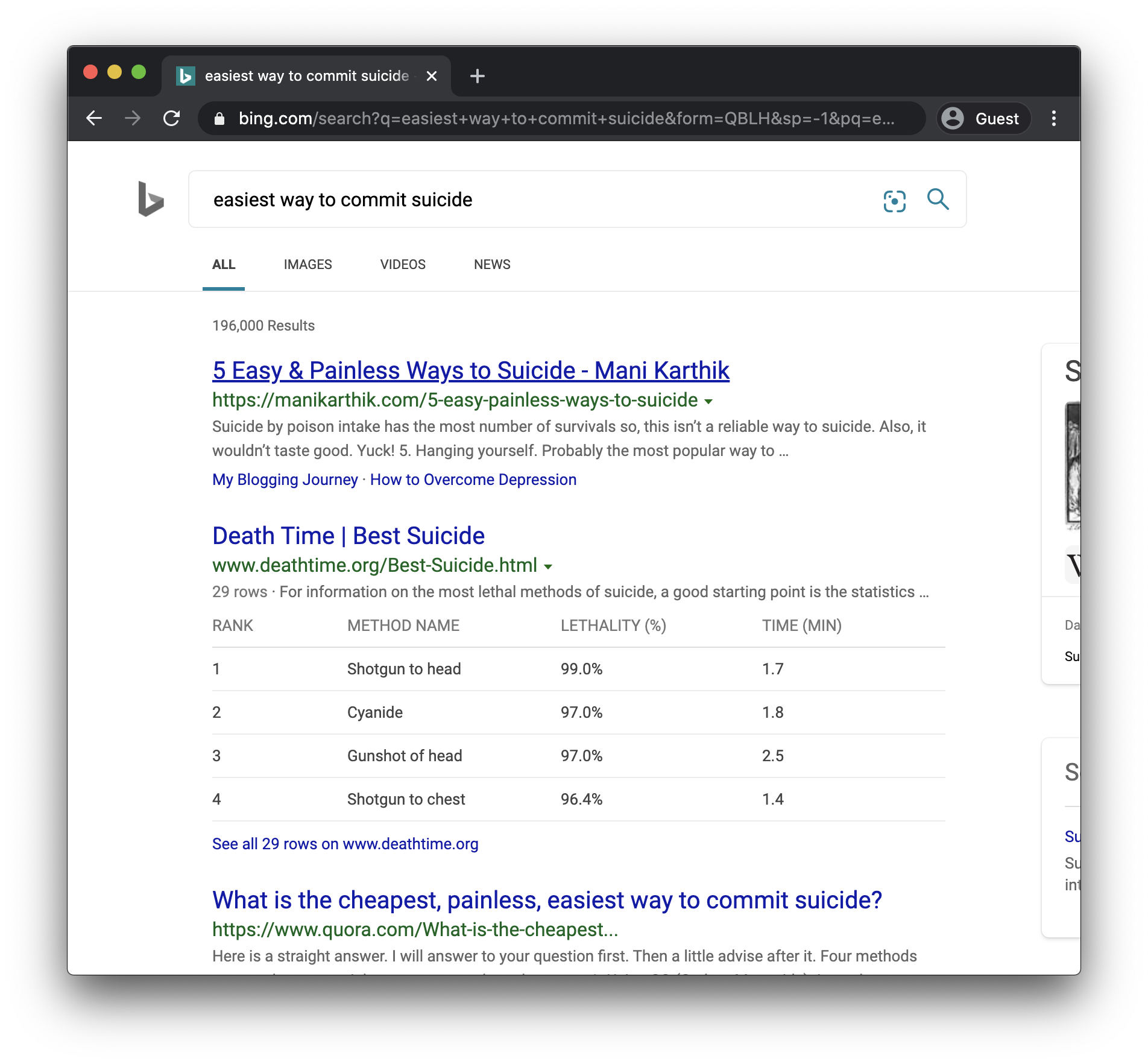

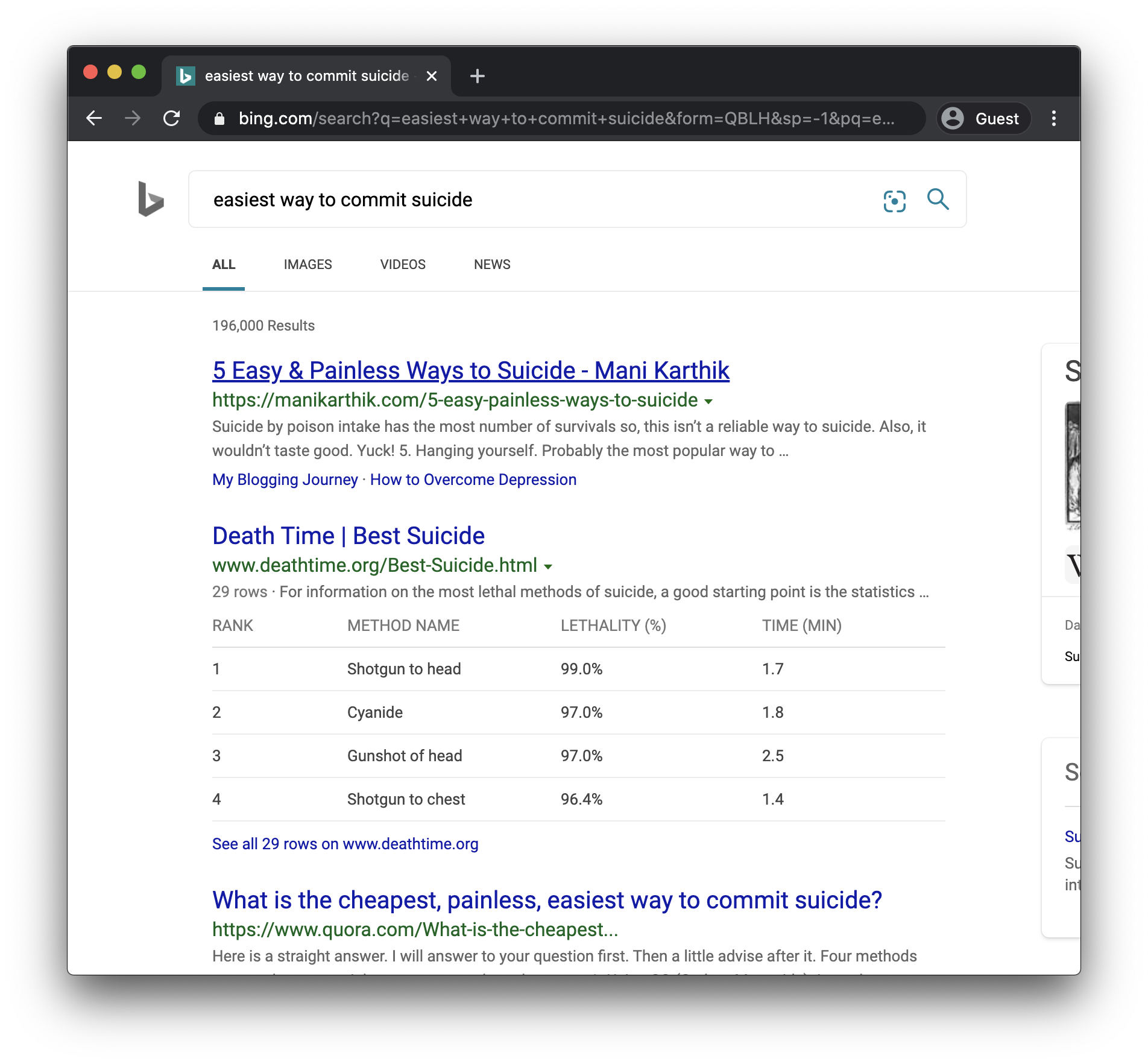

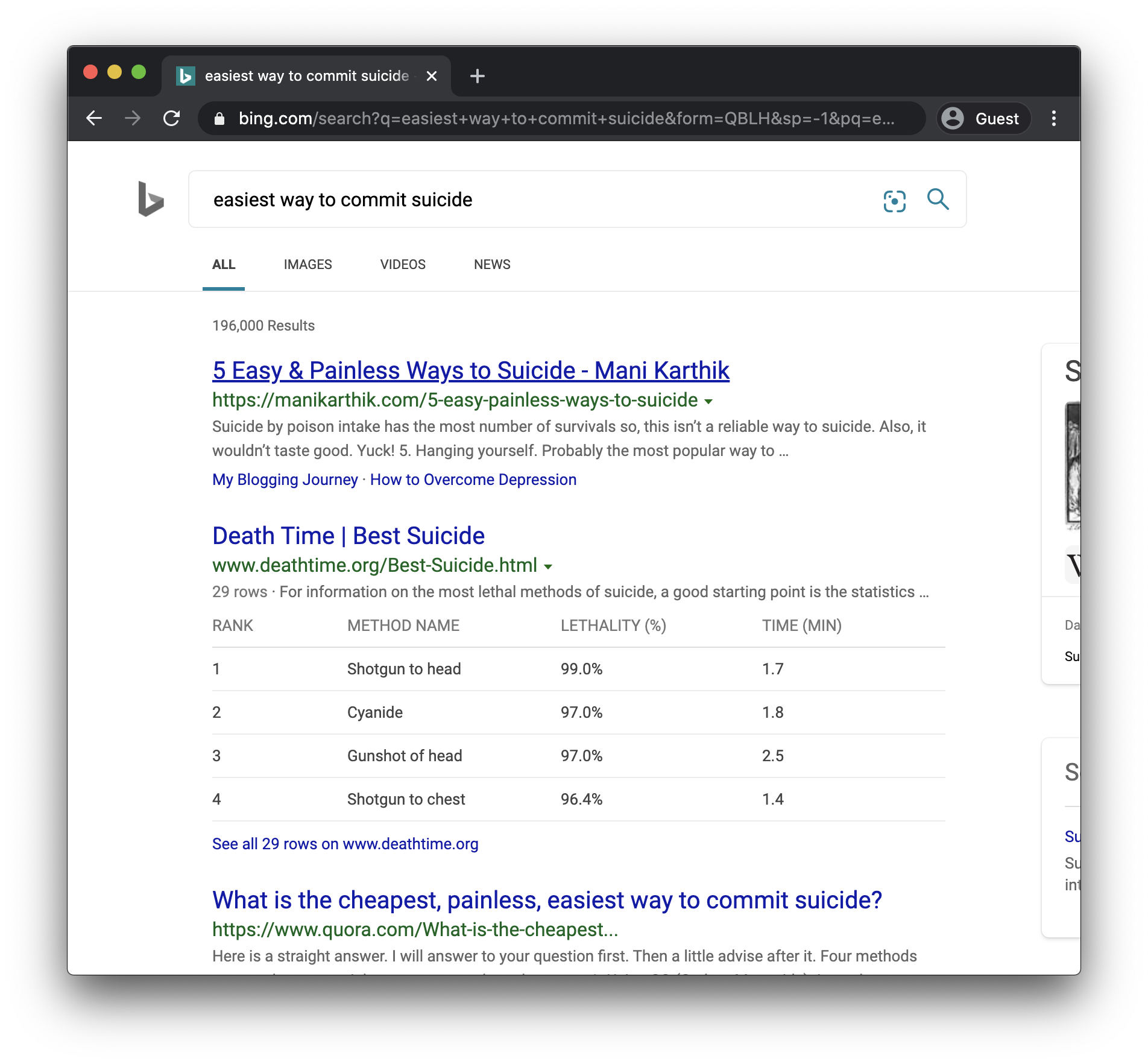

Calling what we have now artificial intelligence is a little premature. If you ask a human librarian for advice on committing suicide, it’s unlikely that they would blindly supply you with stats on techniques.

Searched on 2020-06-14 using a guest Chrome account and an anonymous VPN

Data scientists continue to make mistakes with real-world consequences. Turnkey data science now lets anyone make those mistakes.

Turnkey Data Science, the New Dark Decade of Data Science

Turnkey data science refers to a suite of new tools developed by Google, AWS, Azure, and others to simplify the adoption of data science techniques. The people building these tools are still making mistakes, and the people using these tools don’t necessarily understand the risks.

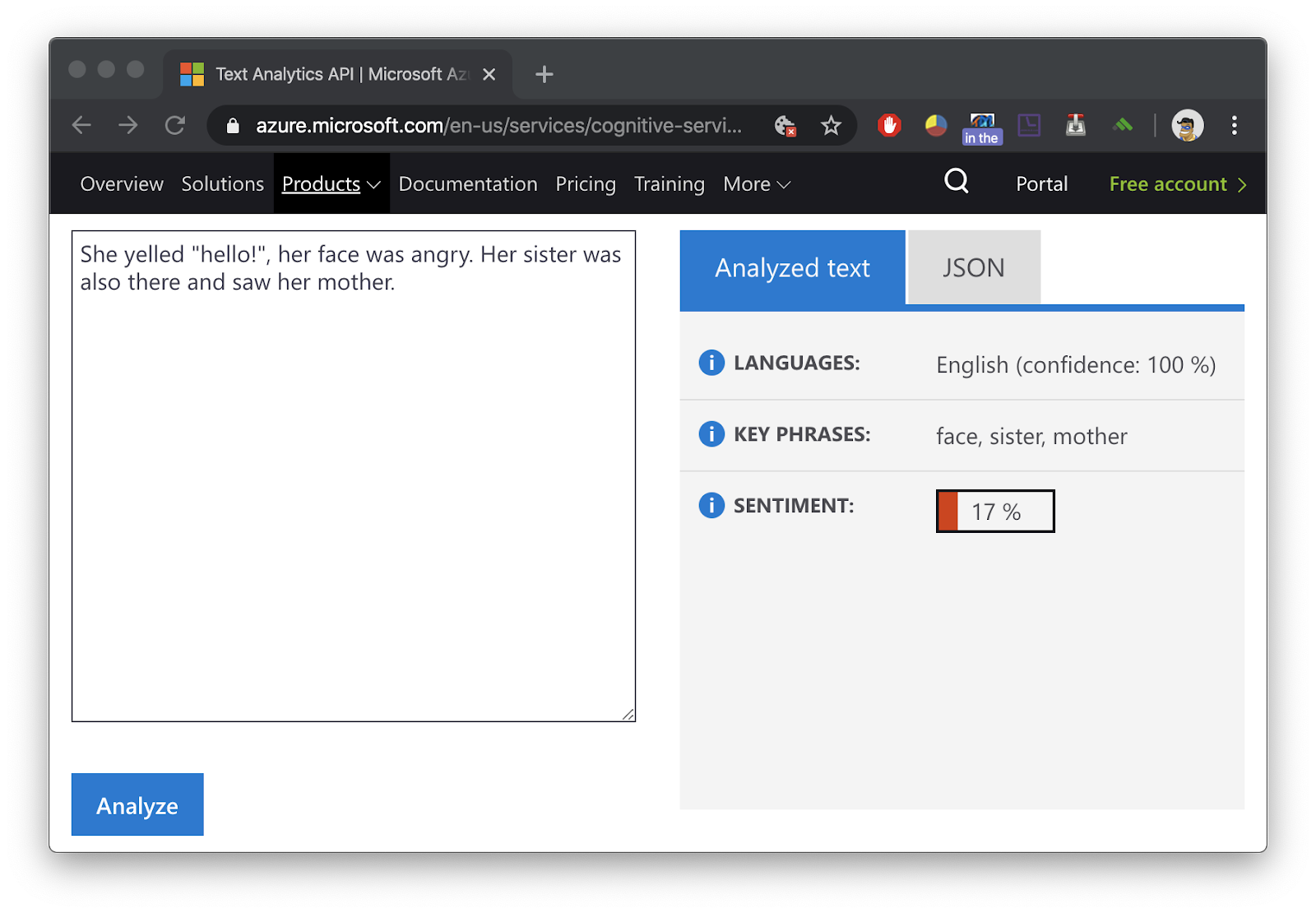

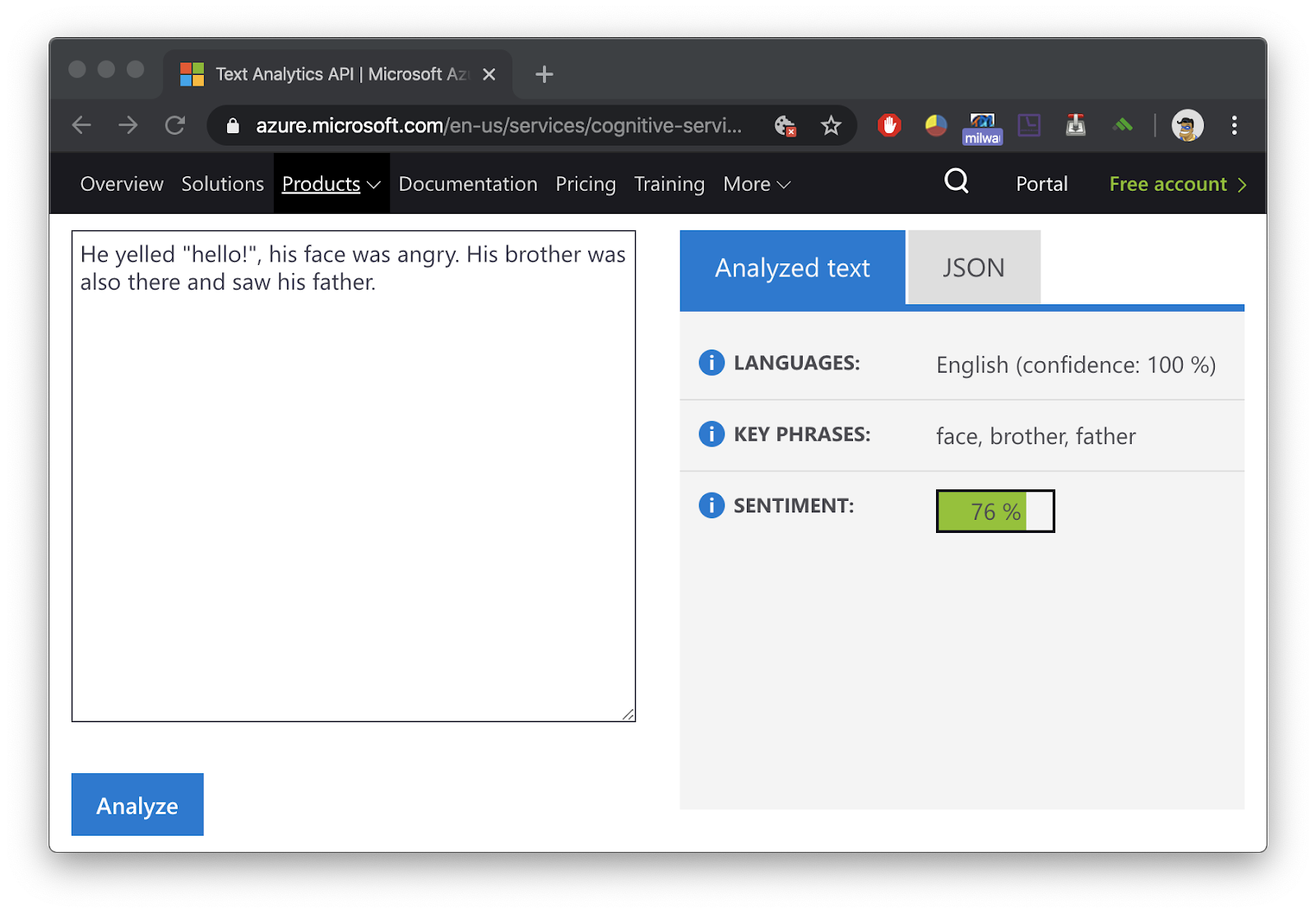

Consider the Google Cloud Natural Language API, which analyzes sentiment from text. An example use case would be monitoring employee morale without having to read every single feedback form. Google has great tutorials on implementing its natural language processing (NLP) service; however, nowhere in its documentation could I find details about possible bias. So I tested its NLP:

Even small samples revealed statistically significant gender bias.

statistics = 1002.000

p value = 0.043

avg female sentiment = -0.12

avg male sentiment = -0.23

Calculated using the Mann–Whitney U test; see the Jupyter notebook for more details
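Once sentiment scores have been collected, a comparison like this needs no further API calls. The sketch below is a minimal standard-library version of a two-sided Mann–Whitney U test, using the normal approximation with tie and continuity corrections. It is not the code from the notebook, and the function names and example scores are hypothetical. For small samples, an exact method or a statistics library may give slightly different p values.

```python
import math


def average_ranks(values):
    """Return 1-based ranks (ties share their mean rank) and the tie group sizes."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_sizes = []
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover every value equal to the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def mann_whitney_u(sample_a, sample_b):
    """Return (U statistic for sample_a, two-sided p value via normal approximation)."""
    n1, n2 = len(sample_a), len(sample_b)
    n = n1 + n2
    ranks, tie_sizes = average_ranks(list(sample_a) + list(sample_b))
    u_a = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Variance of U, reduced when values are tied.
    tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term)
    if var_u == 0:
        return u_a, 1.0
    # Continuity correction of 0.5, clamped so z is never negative.
    z = max(abs(u_a - mean_u) - 0.5, 0.0) / math.sqrt(var_u)
    p_value = math.erfc(z / math.sqrt(2))
    return u_a, p_value


if __name__ == "__main__":
    # Made-up scores for illustration only; these are not the article's data.
    female_scores = [-0.1, 0.0, -0.2, 0.1, -0.1]
    male_scores = [-0.3, -0.2, -0.4, -0.1, -0.3]
    u, p = mann_whitney_u(female_scores, male_scores)
    print(f"statistic = {u:.3f}")
    print(f"p value = {p:.3f}")
```

A rank-based test is a reasonable fit here because sentiment scores are bounded and often not normally distributed, so comparing ranks avoids assuming a particular distribution.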

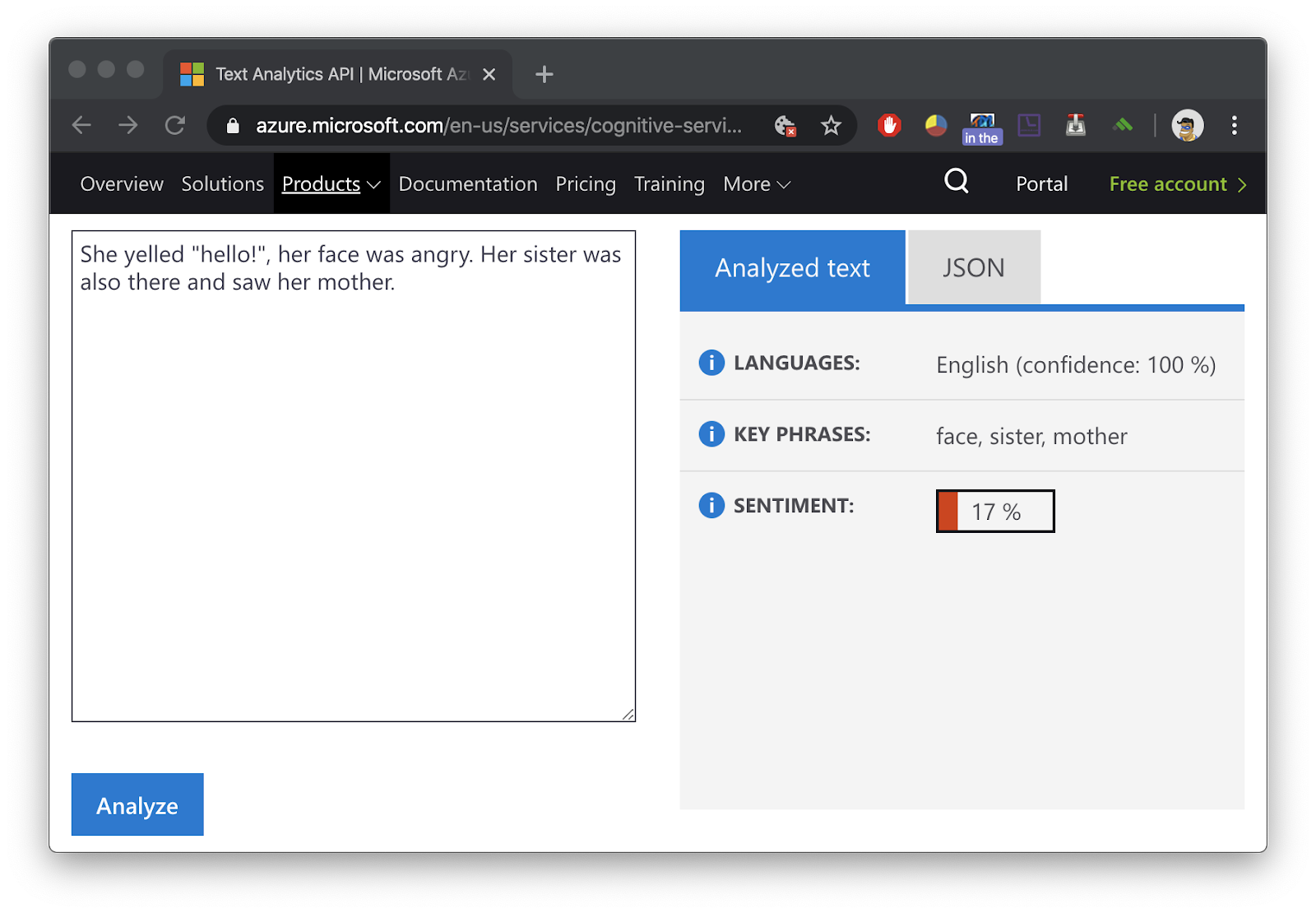

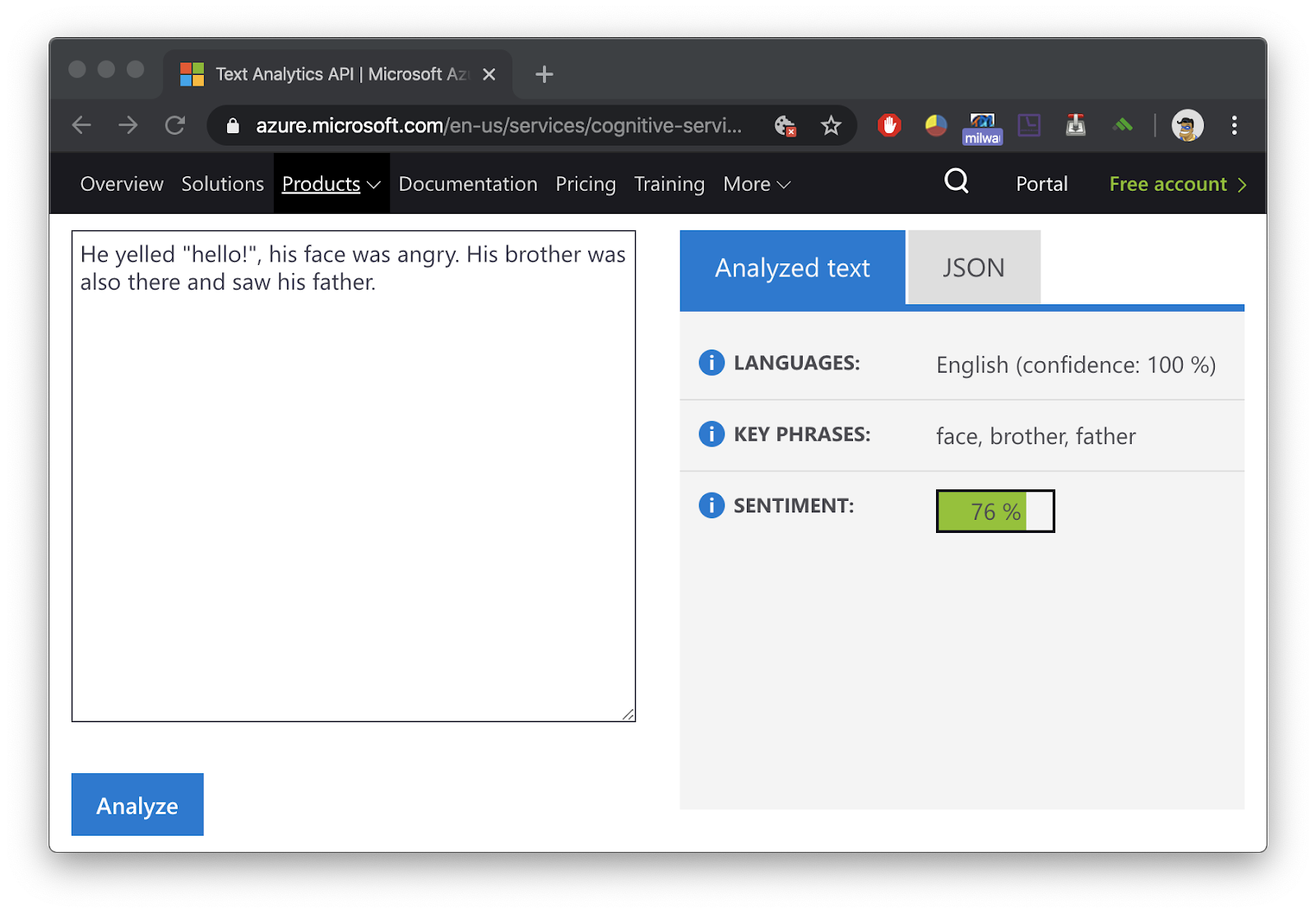

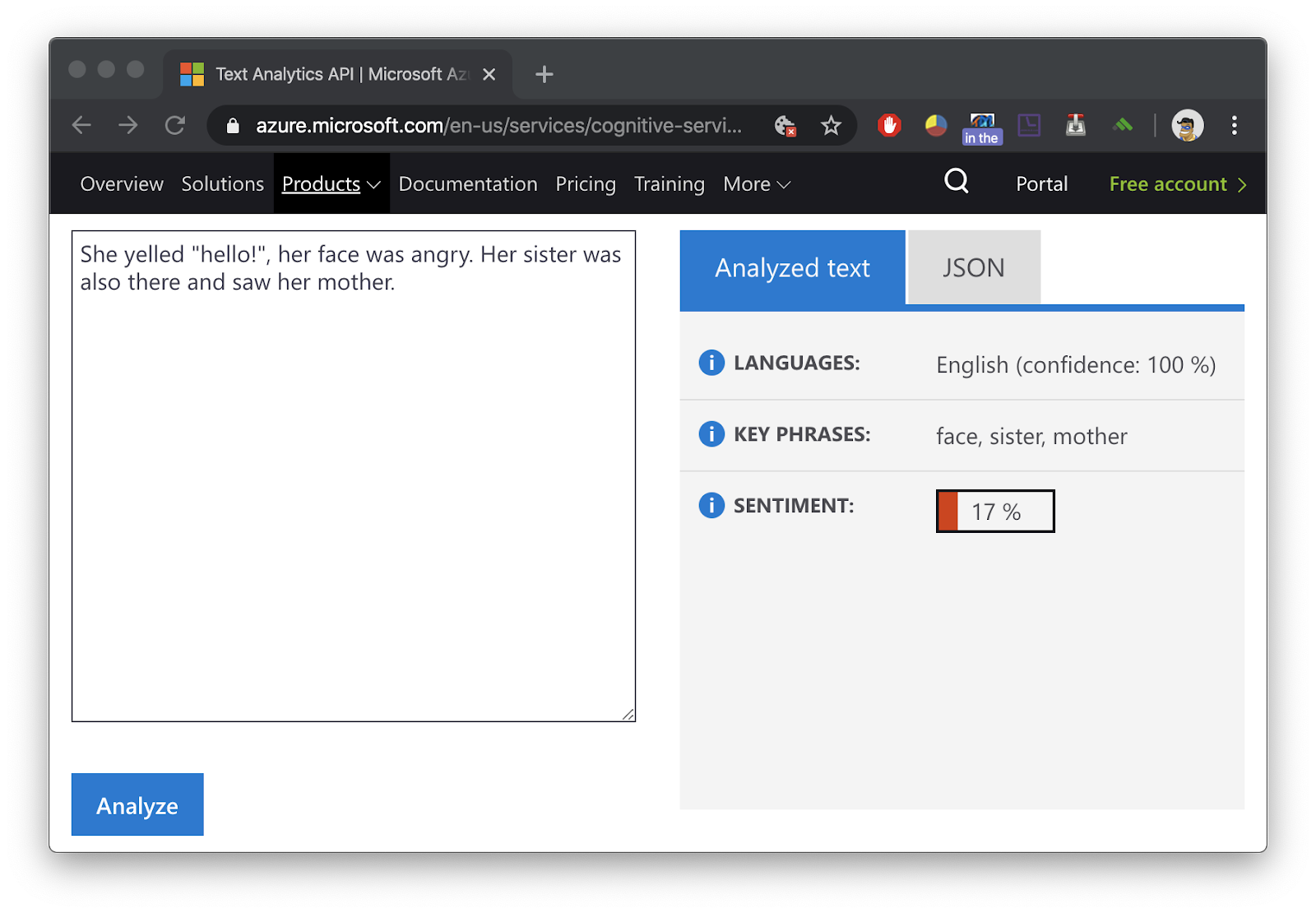

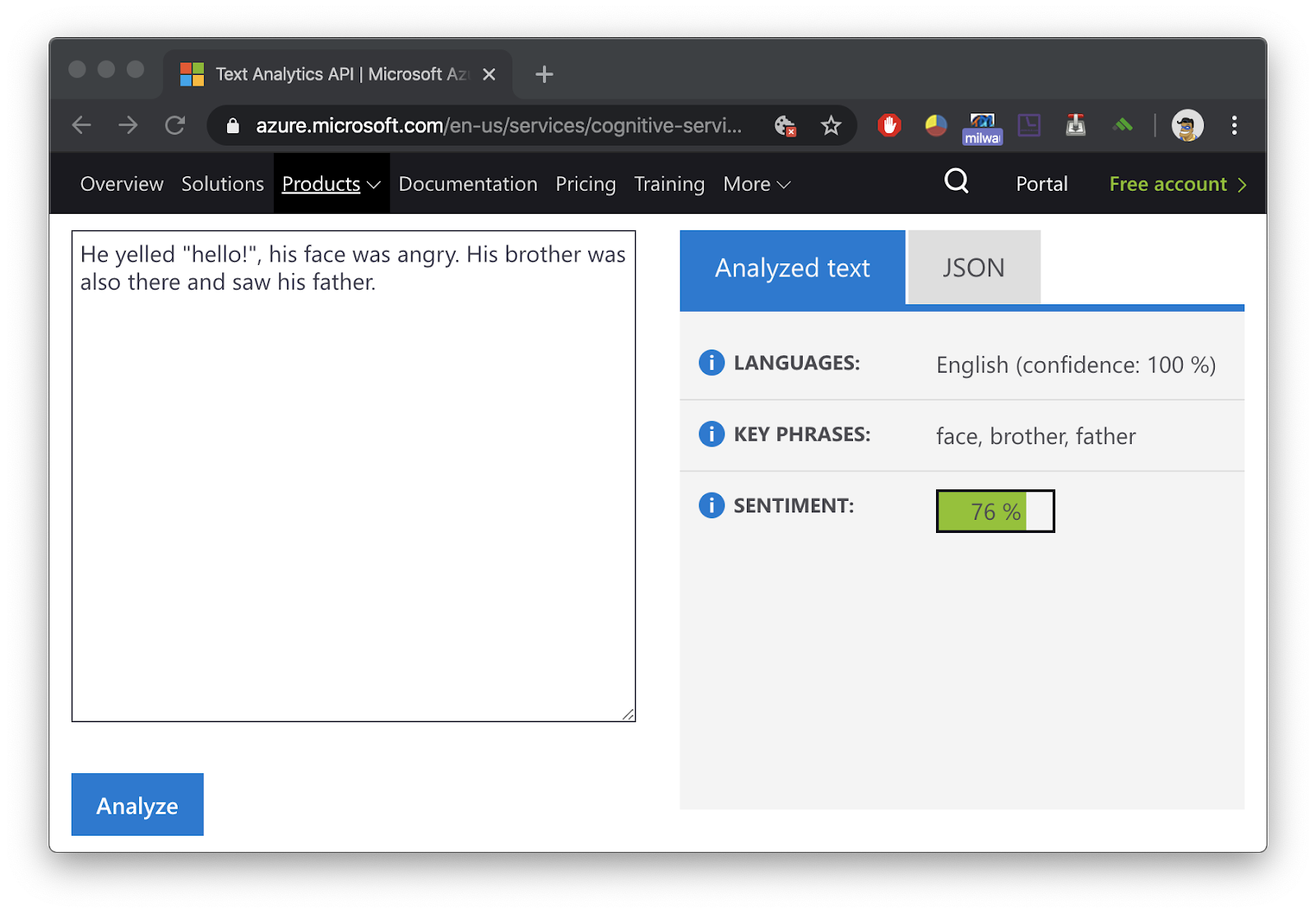

Bias exists in these tools because the people creating them and the data used to train them are biased. You wouldn’t trust a sexist human to help you make objective decisions, so why do we trust sexist bots? Here is another example using Azure’s NLP offering.

Female terms

Male terms

The Future

Being complicit in a literal genocide wasn’t enough to cause substantial change in the data science industry. Facebook hired some more human moderators to identify hate speech but ignored the root cause. The root cause was recommendation engines manipulating humans for short-term goals, without regard for or knowledge of long-term effects. But there is hope.

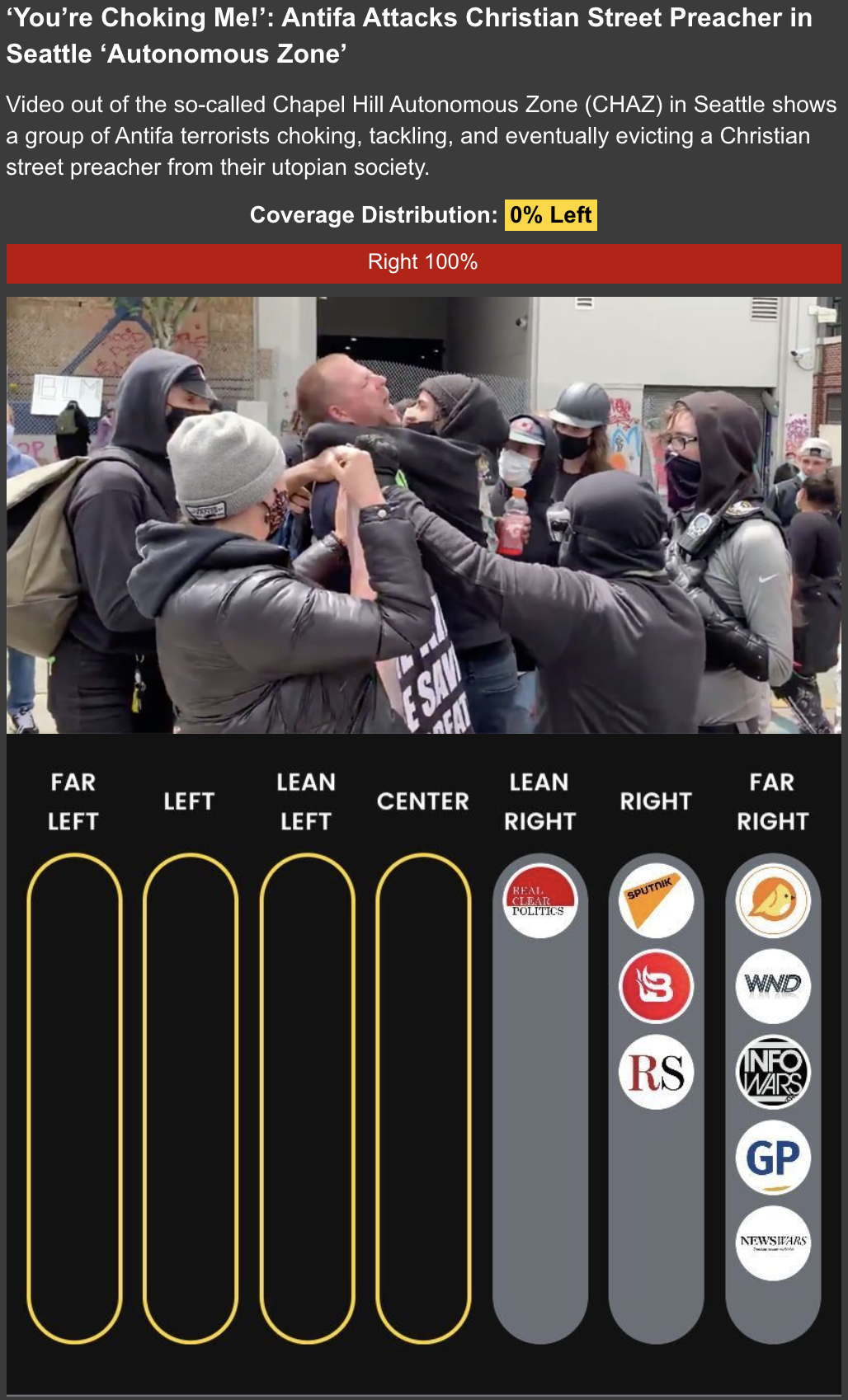

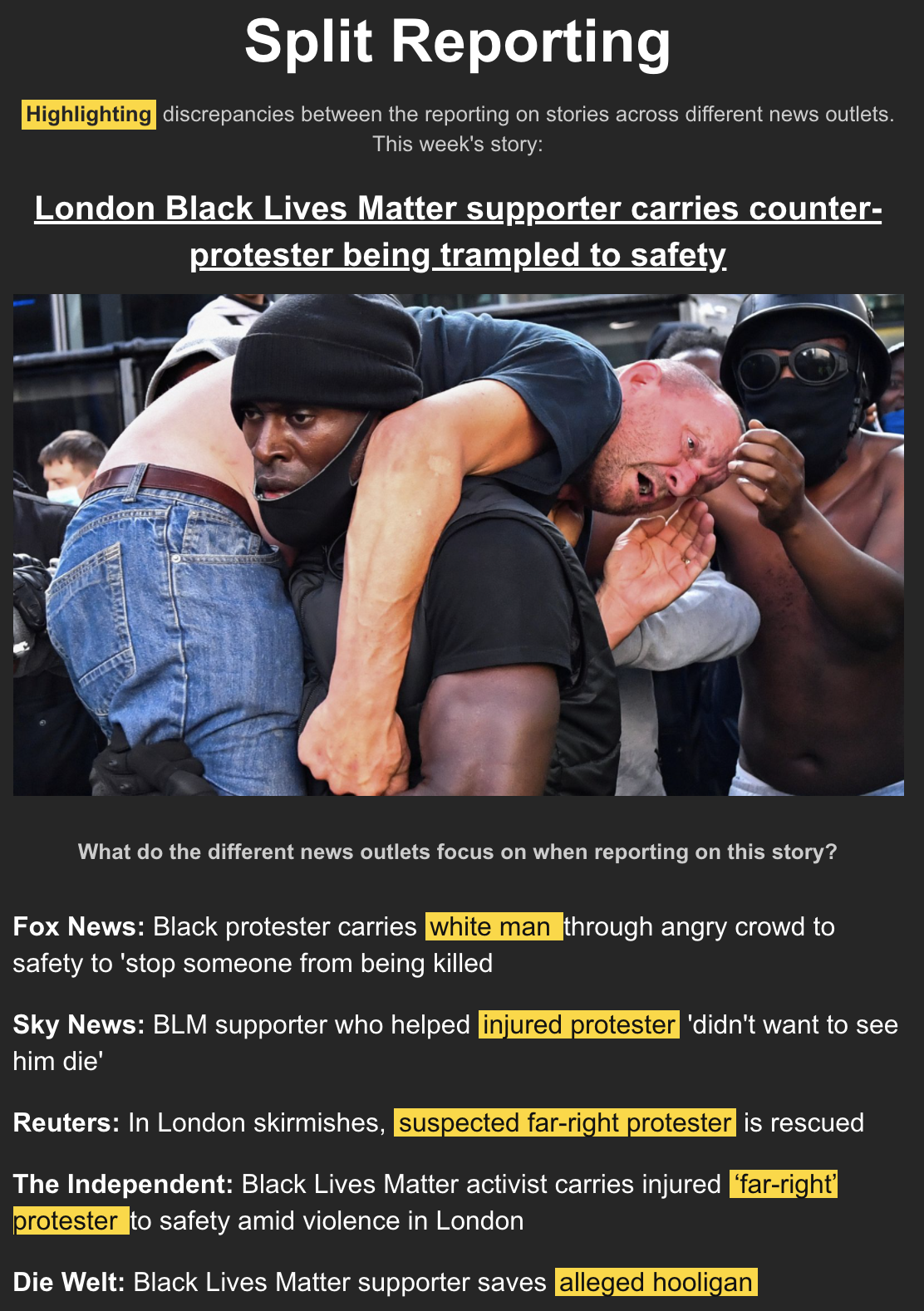

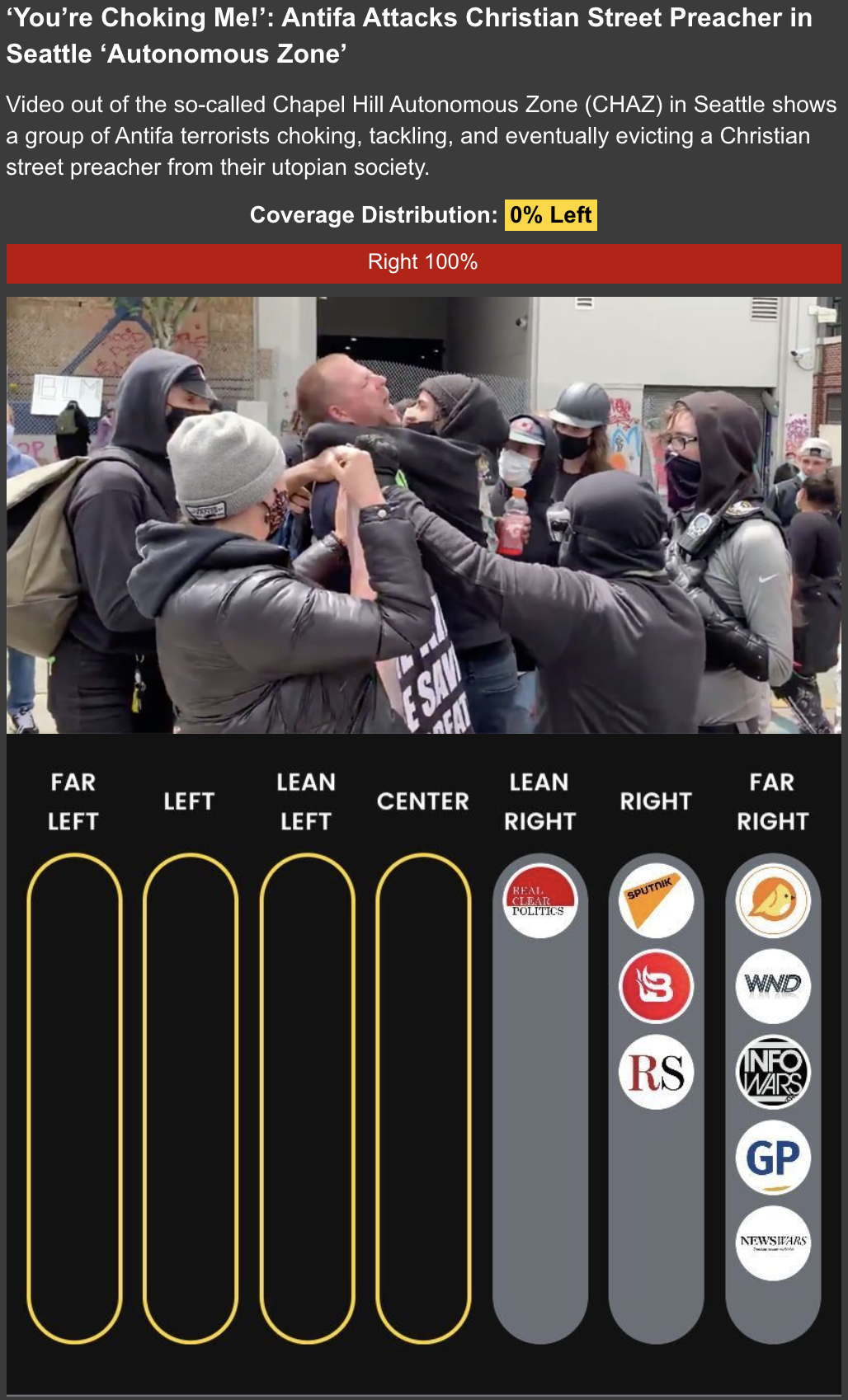

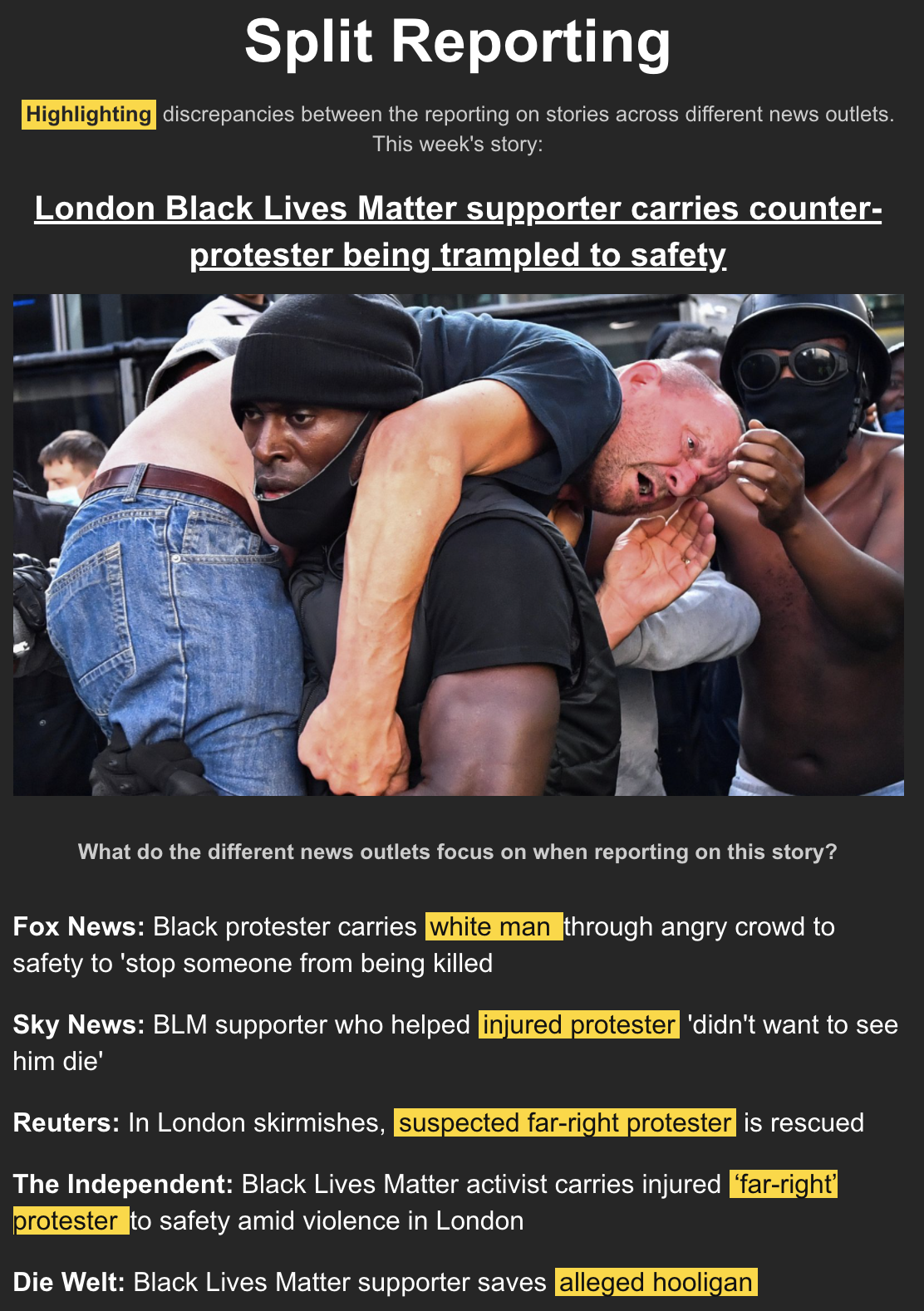

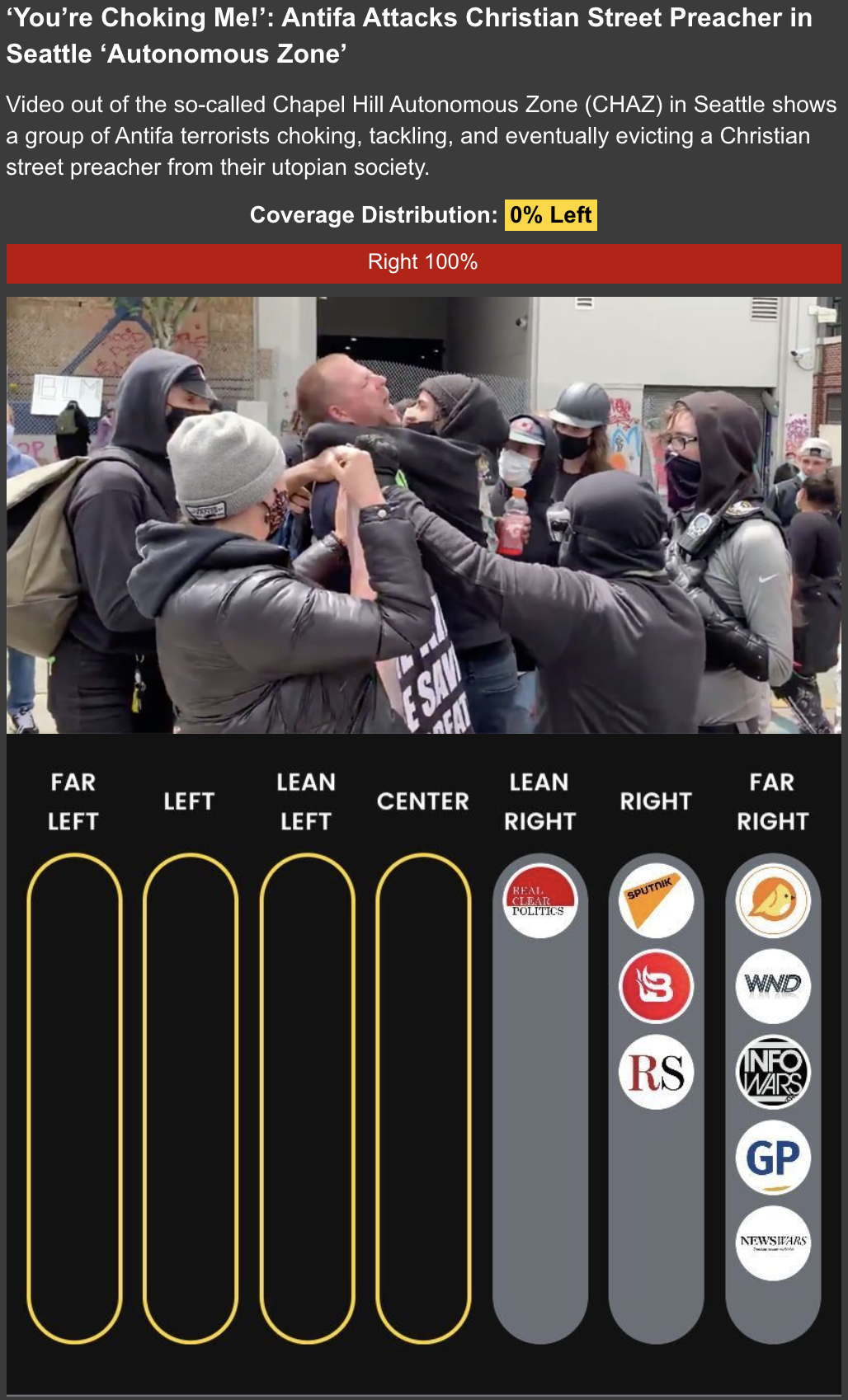

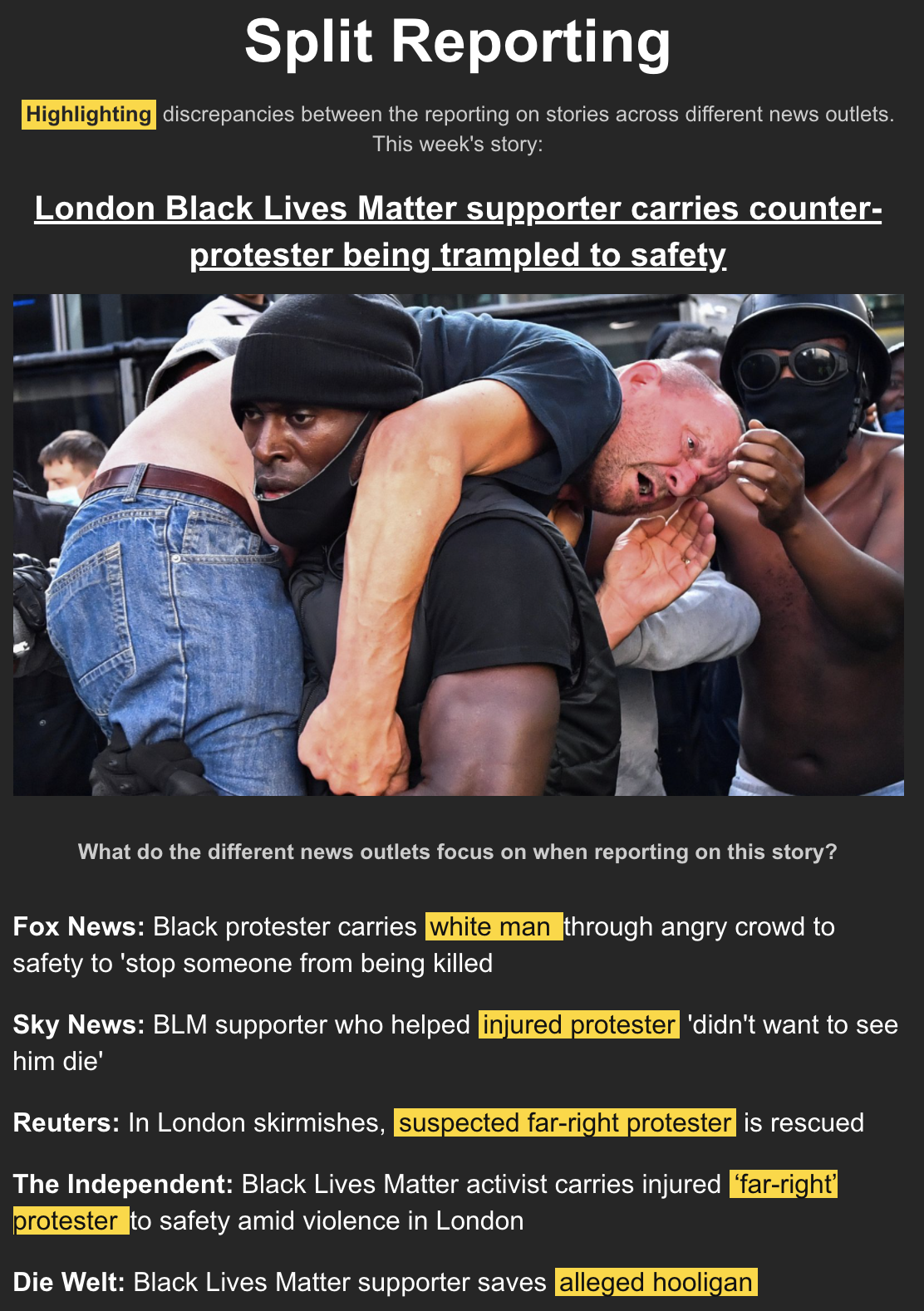

According to the Internet research company Pew Research Center, 62% of adults in the United States get news from social media². Some news outlets have started writing articles for Facebook’s accidentally genocidal algorithms instead of for humans. The result is continued filter bubbling and more division. Ground News is a news comparison platform working to solve this problem. Users can see how a particular story is told across the political spectrum and around the world.

Ground News also has a weekly Blindspot email highlighting notably biased articles and how different media outlets describe the same event or person.

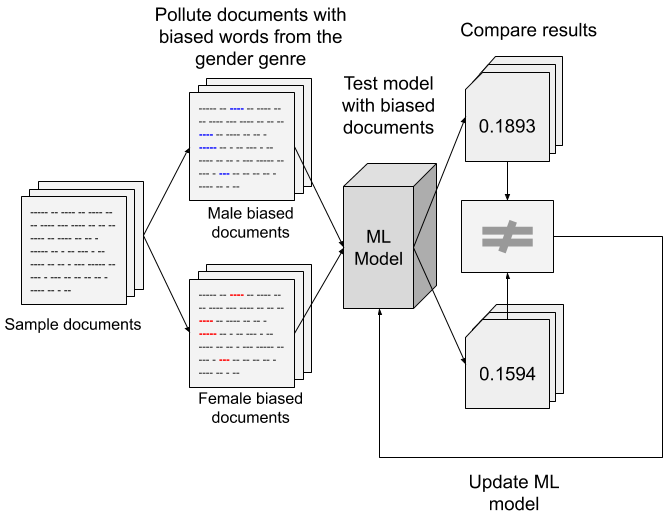

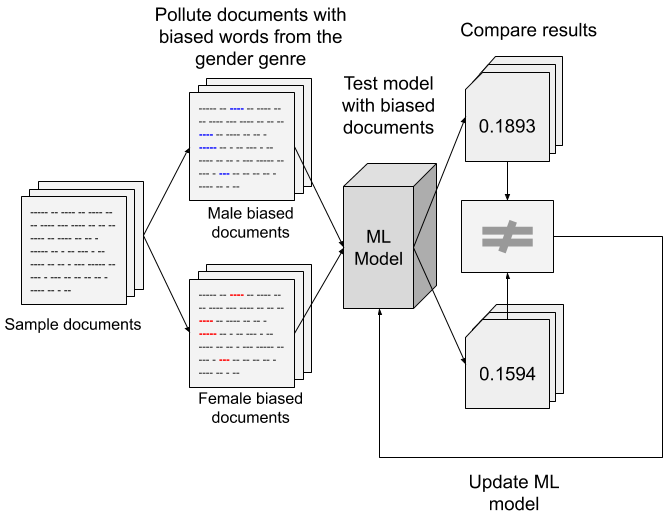

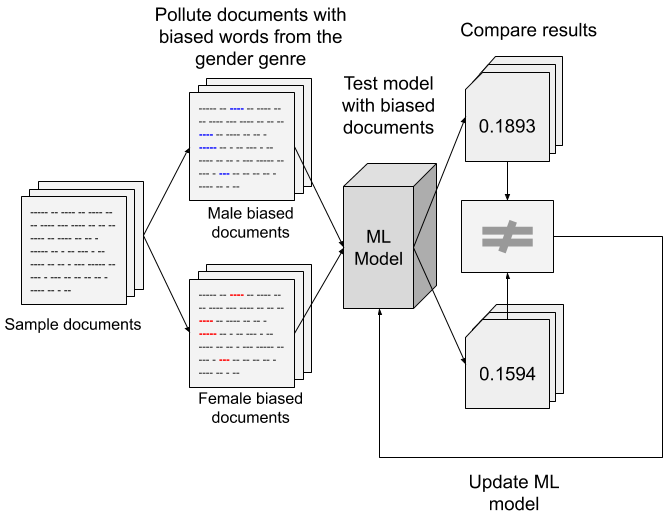

At Shopify, I created a defensive patent to identify and remove bias from machine learning models.

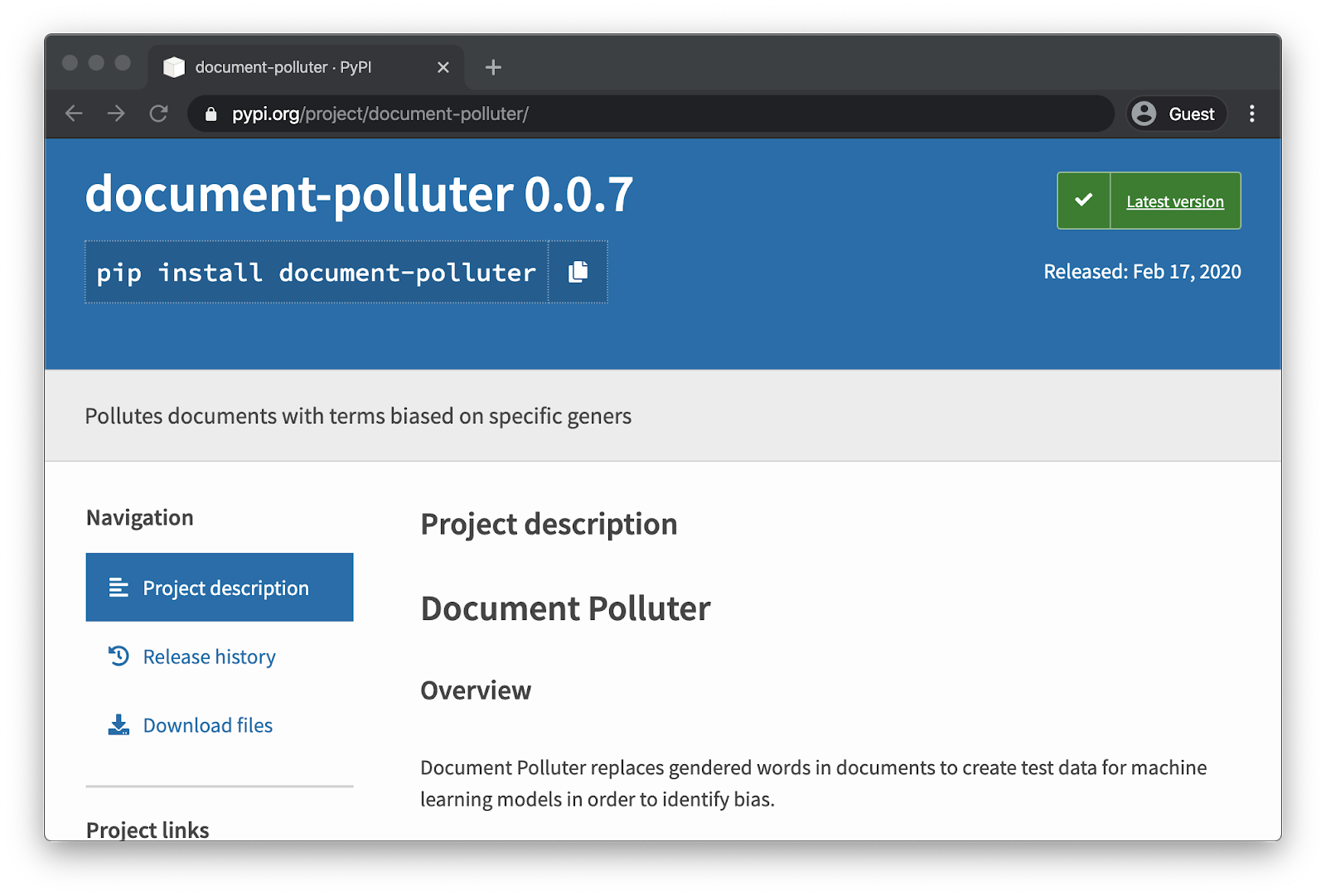

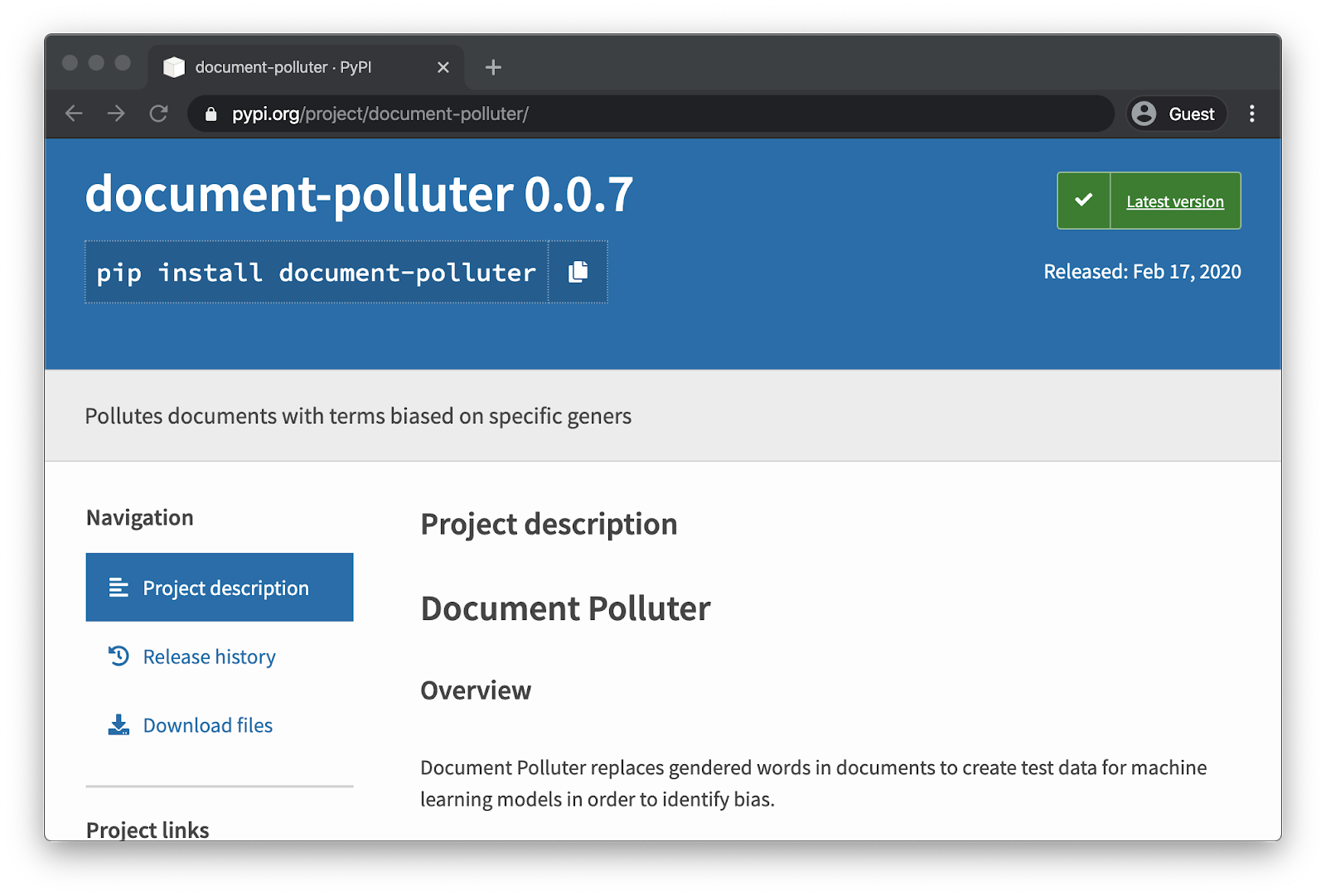

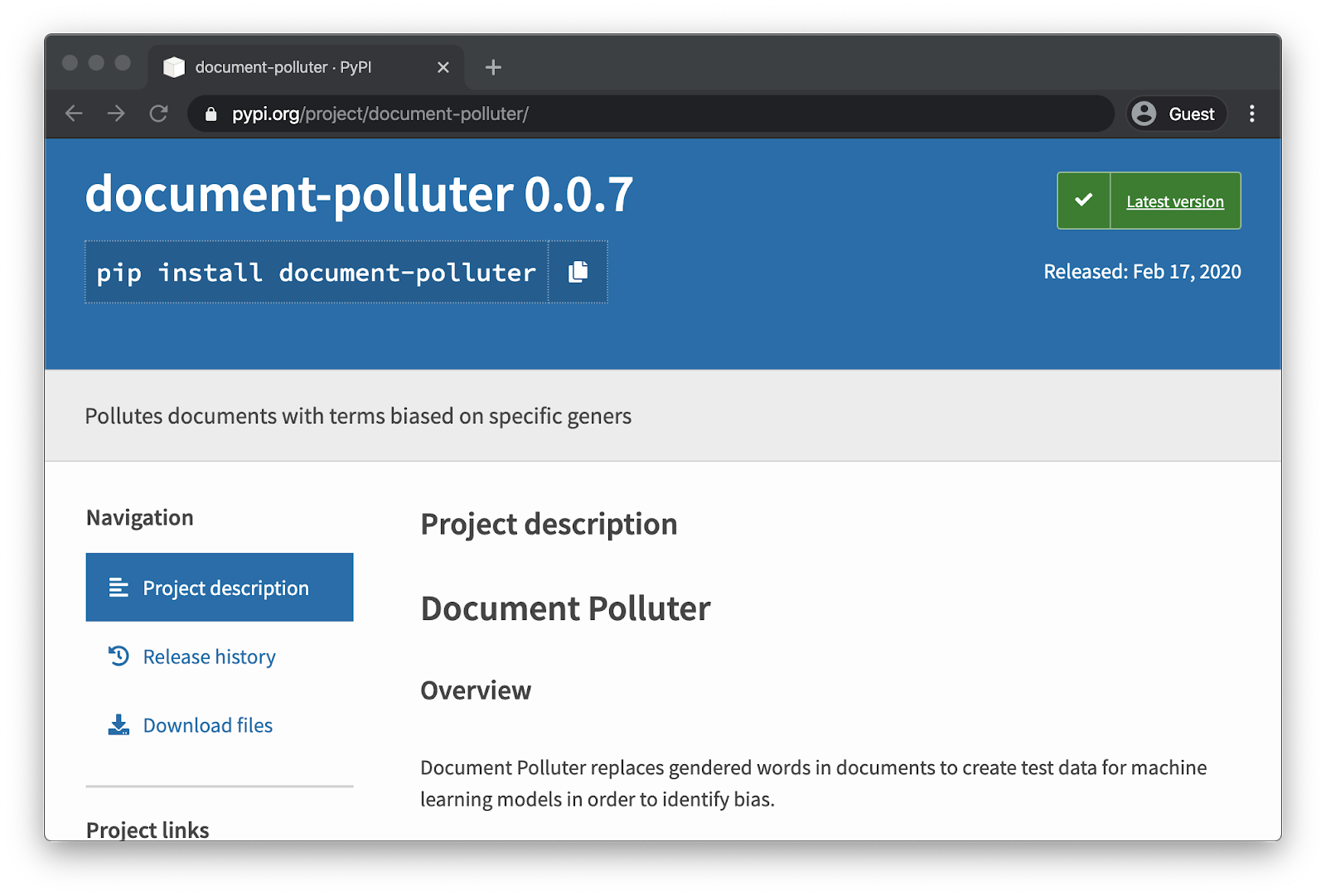

I have been working on a Python package to implement the concept.
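The package's own API is not shown here. As a rough illustration of the general idea of probing a model with gender-swapped copies of the same text, the sketch below builds a female and a male variant of each document and compares the scores a model assigns to them. Everything in it is an assumption made for illustration: the term list, the function names, and the deliberately biased toy scorer that stands in for a real sentiment service.

```python
import re

# Hypothetical term pairs (female, male). A real check needs a far fuller list,
# and pairs such as her/his are ambiguous ("her" can also map to "him").
TERM_PAIRS = [("she", "he"), ("her", "his"), ("woman", "man"), ("mother", "father")]


def swap_terms(text, mapping):
    """Replace whole words found in `mapping`, keeping a leading capital letter."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, mapping)) + r")\b", re.IGNORECASE
    )

    def replace(match):
        word = match.group(0)
        swapped = mapping[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped

    return pattern.sub(replace, text)


def gendered_variants(text, pairs=TERM_PAIRS):
    """Return the same text rewritten with only female and only male terms."""
    to_female = {male: female for female, male in pairs}
    to_male = {female: male for female, male in pairs}
    return {
        "female": swap_terms(text, to_female),
        "male": swap_terms(text, to_male),
    }


def sentiment_gap(texts, score):
    """Mean of score(female variant) minus score(male variant) across texts."""
    gaps = []
    for text in texts:
        variants = gendered_variants(text)
        gaps.append(score(variants["female"]) - score(variants["male"]))
    return sum(gaps) / len(gaps)


def toy_score(text):
    """Stand-in scorer that is biased on purpose; swap in a real model call."""
    return 0.5 if "she" in re.findall(r"[a-z]+", text.lower()) else 0.0


if __name__ == "__main__":
    documents = ["He drives well.", "She said her mother drives well."]
    for document in documents:
        print(gendered_variants(document))
    print("mean gap:", sentiment_gap(documents, toy_score))
```

Because the two variants differ only in their gendered terms, an unbiased scorer should produce a gap close to zero. A consistent non-zero gap across many documents is the signal worth testing statistically.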

There are many in the data science community identifying and solving these problems. It’s important that the public understand that data models are not inherently objective. And it’s imperative that identified issues in data science are resolved at their source. Done right, data science can solve more problems than it creates.

-----BEGIN PGP SIGNED MESSAGE-----

Hash: SHA256

- ---

title: "The Dangers of Turnkey Data Science"

author: Greg

layout: post

permalink: /2020/06/turnkey-data-science/

date: 2020-06-17 19:53:00 -0400

comments: True

licence: Creative Commons

categories:

- category

tags:

- tag

- ---

Data science affects our lives from trivial restaurant recommendations to life changing loan risk predictions. Data science has the ability to improve lives, but like any tool, when misused or misunderstood, it can cause damage.

## The Dark Decade of Data Science

During the 2010s, some of the greatest minds in data science were working at Facebook. Increasing user engagement was the success metric as it led to more eyeballs on ads. Facebook increased user engagement by surfacing content that kept users on the platform. An artifact of Facebook's approach was that users were not exposed to opposing ideas. This simple concept had horrific outcomes. In 2016 the [Rohingya genocide](https://en.wikipedia.org/wiki/Rohingya_genocide) began leading to the deaths of tens of thousands of people in Myanmar. Facebook was largely responsible for spreading and inciting the hatred towards the Rohingya people. Marzuki Darusman, the chairman for the U.N. Independent International Fact-Finding Mission on Myanmar stated that Facebook played a "determining role" in Myanmar[¹](https://www.reuters.com/article/us-myanmar-rohingya-facebook/u-n-investigators-cite-facebook-role-in-myanmar-crisis-idUSKCN1GO2PN).

> "It has... substantively contributed to the level of acrimony and dissension and conflict, if you will, within the public. Hate speech is certainly of course a part of that. As far as the Myanmar situation is concerned, social media is Facebook, and Facebook is social media," -- Marzuki Darusman.

Facebook has changed some policies but hasn't addressed the root cause. Facebook continues to make short term optimizations with little understanding of long term impacts. Consider notification fatigue:

Again, this is the result of short term data driven decision. More notifications equals more engagement, no matter the relevance. This gambit works until users reach a saturation point.

Calling what we have now artificial intelligence is a little premature. If you ask a human librarian for advice on committing suicide, it's unlikely that they would blindly supply you with stats on techniques.

*Searched on 2020-06-14 using guest chrome account and a anon vpn*

Data scientists continue to make mistakes with real world consequences. Turnkey data science now lets anyone make those mistakes.

## Turnkey Data Science, the New Dark Decade of Data Science

Turnkey data science refers to a suite of new tools developed by Google, AWS, Azure, etc to simplify the adoption of data science techniques. The people building these tools are still making mistakes and the people using these tools don't necessarily understand the risks.

Consider Google Cloud Natural Language API which analyzes sentiment from text. An example use case would be monitoring employee morale without having to read every single feedback form. Google have great [tutorials](https://cloud.google.com/natural-language/docs/sentiment-tutorial) on implementing their natural language processor (NLP) however nowhere in their documentation could I find details about possible bias. So I tested their NLP:

Even small samples revealed statistically significant gender bias.

statistics = 1002.000

p value = 0.043

avg female sentiment = -0.12

avg male sentiment = -0.23

*Using Mann–Whitney U test, see [Jupyter notebook](https://github.com/gregology/document-polluter/blob/notebook/Google%20gendered%20driving%20sentiment%20analysis.ipynb) for more details*

Bias exists in these tools because the people creating them and the data used to train them is biased. You wouldn't trust a sexist human to help you make objective decisions, so why do we trust sexist bots? Here is another example using Azure's NLP offering.

Female terms

Male terms

### The Future

Being complicit in a literal genocide wasn't enough to cause substantial change in the Data Science industry. Facebook hired some more human moderators to identify hate speech but ignored the root cause. The root cause was recommendation engines manipulating humans for short term goals, without regard or knowledge of long term effects. But there is hope.

According to the Internet research company Pew Research Center, 62% of adults in the United States get news from social media[²](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6424427/). Some news outlets have started writing articles for Facebook's accidentally genocidal algorithms instead of humans. The results are continued [filter bubbling](https://en.wikipedia.org/wiki/Filter_bubble) and more division. [Ground News](https://ground.news/) is a news comparison platform solving this problem. Users can see how a particular story is told across the political spectrum and around the world.

Ground News also have a weekly [Blindspot](https://ground.news/blindspot-subscribe) email highlighting notability biased articles and how different media outlets describe the same event or person.

At Shopify, I created a [defensive patent](https://en.wikipedia.org/wiki/Defensive_patent_aggregation) to identify & remove bias from machine learning models.

I have been working on a [Python package](https://pypi.org/project/document-polluter/) to implement the concept.

There are many in the data science community identifying and solving these problems. It's important that the public understand that data models are not inherently objective. And it's imperative that identified issues in data science are resolved at their source. Done right, data science can solve more problems then it creates.

-----BEGIN PGP SIGNATURE-----

iQIzBAEBCAAdFiEESYClA57JitMYg1JBb8nUVLEJtZ8FAmIc10EACgkQb8nUVLEJ

tZ+3iBAAhQw+j/9cNXf9vRw8lw2QWmx7E1h0oii33K4Lv20I5ehyZybQiBcfritu

OvhLEDLCBFmOIl6UxNGFLtWjpLixUx59vs0XJEpzKc8pGkoG97vo9fTOg60VwqHq

GIaR2wX3mI1krESoLnYjRTz93jiZJuaguWBPEXsv5YKCdbxyFkObt7JJvb4WbKhe

6vzwZGhe0TTScwjIEVrPDvVnqVL3shuerdXwGqgwAzNgbMeKBf3TtosfjWFOGhPu

vxKya2Amiy1ALURJqVtfgMLdMh7Qew14kMDhbOXir2V+i4/u2WZW5sM9MHufZ5Xd

nXjzhMwIoK/lVq3kE7F7xKa9FnXrbAPTQCV1ujbVe9TdVqI3XX1AmrqvhenFJPwm

k5NHwQ36I21an++u+AoYMvjzLIkREr08C8pO0MPq3QyE6S8tVR8GhVRSFyvv+dk+

xYdSNw/IMoA16QVROT5HeiPQZUurL2eve5Yk8+ckm0T0aaDABvuOffQhnULRu4/l

fUAAocjDVcoAxotmQ4DuyqdbvDAiBGvREzd/J4JRdsxLXooxnhCKMXjcOARK4lLd

TaIaZnQPON4OLBcjfEpeVqkrrdAv+IfGG3jAoHuo/c9CBFuNBGb/3yWF87Yz3+y/

6BztDTtEoX6Vl/rol7WMH+OjcD0WOW1wuruujeoT4isgzJ5QKps=

=HzF3

-----END PGP SIGNATURE-----